Useful but Flawed

AI is a sharp kitchen knife in a world of shaky hands

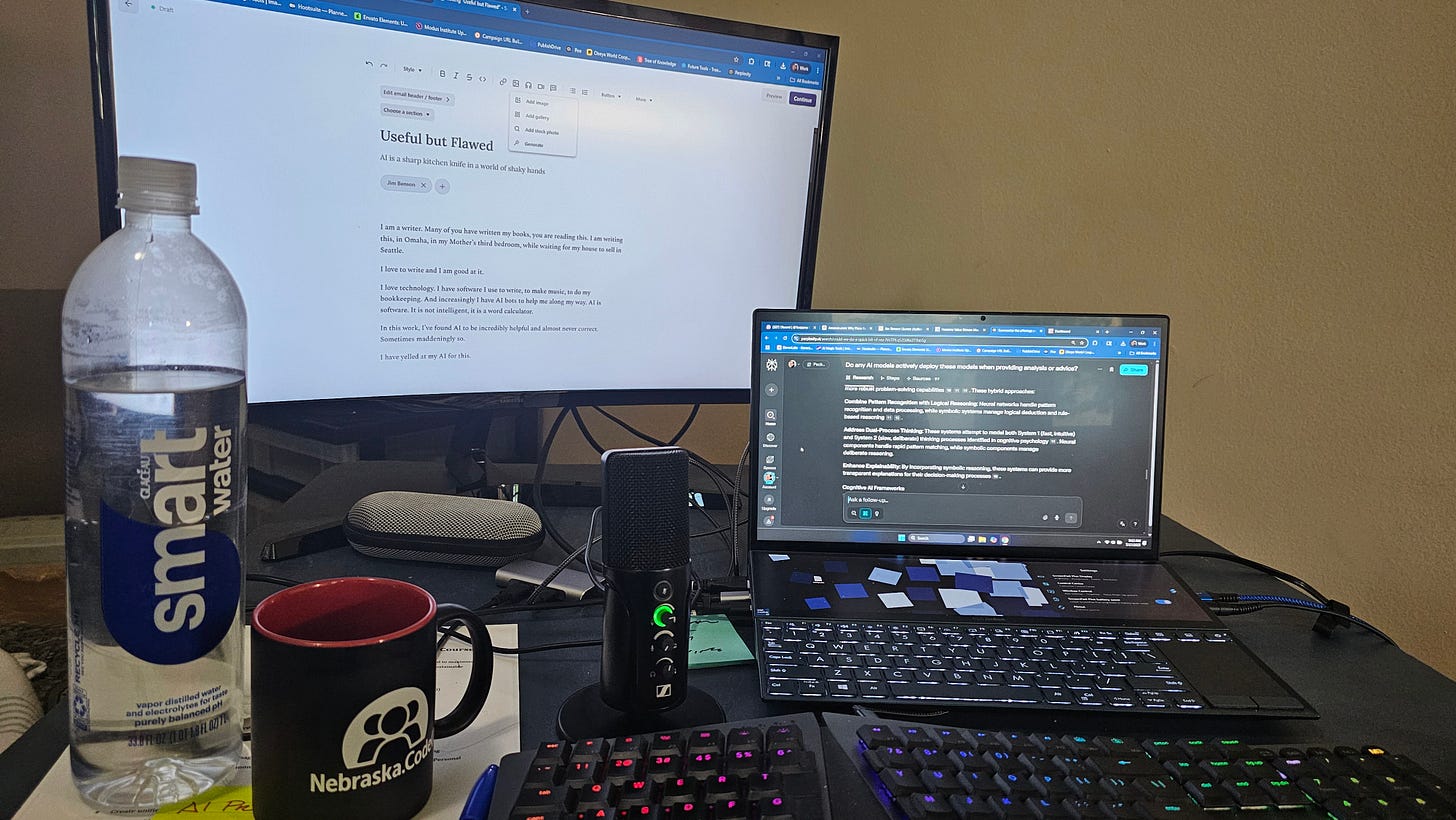

I am a writer. Many of you reading this have read my books. I am writing this in Omaha, in my mother’s third bedroom, while waiting for my house in Seattle to sell.

I love to write and I am good at it.

I love technology. I have software I use to write, to make music, and to do my bookkeeping. And increasingly I have AI bots to help me along my way. AI is software. It is not intelligent; it is a word calculator.

In this work, I’ve found AI to be incredibly helpful and almost never correct. Sometimes maddeningly so.

I have yelled at my AI for this.

AI and Humans

All models are wrong, some are useful. ~ George Box

AI is a model. ~ Jim Benson

AI is powerful. Flawed but useful. Hyped, but prone to errors that non-experts are unlikely to see. AI does not hallucinate; it literally makes things up to satisfy its customer. And the customers love that.

Humans (like you and me) gravitate to / run on a diet of confirmation bias. We are always prone not only to seek out information we want, but interpret information in a way that satisfies us.

AI runs on feeding our confirmation bias. We eat this up.

AI is sometimes useful enough that we can tell it is placating us, but it is often flawed enough that we can’t.

I have rarely been given completely correct advice by AI. I have often been given useful advice.

Humans: You still have to interpret the results of the word calculators.

Bosses of Humans: You cannot lay people off for AI and expect reliable results.

System One Thinking Is AI Hype

System One Thinking is our fast, automatic, and intuitive way of interpreting the world. It is reflex. It seeks to make life effortless and instinctive. In good times it allows us to make quick judgments and decisions without a lot of processing. It is often incorrect and often predictably incorrect.

System Two Thinking is slower, more deliberate, and analytical. This is us engaging conscious effort, logical reasoning, and careful consideration. This is how humans take time to solve complex problems or notice opportunities. It is wrong much less often than System One, but the reflex is faster than the thoughtful act, easier than the thoughtful act, and “just feels right.”

System Two takes a lot more energy and frustration than System One. Therefore, people gravitate towards System One a painful percentage of the time. This means people resort to not analyzing, not solving, and not considering more often than not.

Both of these come from Daniel Kahneman and Amos Tversky’s work and are covered in Kahneman’s *Thinking, Fast and Slow* and in my *Why Plans Fail*.

Human beings frequently get themselves into trouble by trusting AI to provide information or analysis that has not gone through either System One or System Two. In other words, while AI information is derived (processed through a model), it is not actually interpreted (thought about with intelligence or wisdom).

Me as an example: I have loaded all my writing into AI agents to help me keep track of what I have written and to help organize my thoughts. Every time (actually every time) the agent has given me back a response to a need, it has been useful and it has been flawed.

Every AI backend I have tried has misquoted me to me. It makes up things I say, sometimes in enlightening ways and sometimes in horrible ways that contradict everything I believe. I know the difference because I’m Jim Benson. I know the data set extremely intimately.

Imagine if you don’t know the data set intimately.

The hype of AI is that it will “do our work for us.” The other day I saw someone say that AI was their “expert in things that they aren’t an expert in.”

Danger…Will…Robinson.

They want the results. Their System One wants the convenience of not thinking. AI hype is totally a play on System One thinking, when it could be the opposite. For me, AI is an incredibly powerful tool for removing a lot of overhead from my System Two thinking.

How We Solve Problems and Engage Opportunities (with and without AI)

Over the next few weeks, I’m planning to write about how we spot issues and opportunities and respond to them. Along the way I will provide different visualizations (kanban, etc.) that can help us not just use these models, but trigger them.

It is clear that a large language model and the relational processing power behind it can provide significant benefit in helping us spot problems to solve, opportunities we might miss, and actions where our System Two thinking or collaboration is required.

This, right here, is the future of business and us working as human beings.

Are we using the tools at our disposal to quickly assess, address, and achieve completion of any problem, opportunity, or change that comes our way?

Do we understand our work, our product, and our customer well enough to interpret the data?

Do we know the capabilities of our tools and of our team such that we respond to the right things at the right time?

If You Want to Start Today

Just ping me on LinkedIn and we can set up a time to talk about AI, your workflow, and your team. I’d love to help save some jobs and get your team seeing your work and using your tools better.

You can also check out our Humane Value Stream Mapping course, which will help you see how your work flows and where these tools can help (not replace) the team.

"Humans (like you and me) gravitate to / run on a diet of confirmation bias. We are always prone not only to seek out information we want, but interpret information in a way that satisfies us."

One would think this observation would be commonplace in AI discussions. One would be so wrong.

AI plays to our confirmation bias so much because *we* prompt it. We seed all our biases, preconceptions, prejudices, and what have you into how we structure our queries. No wonder we get equally biased answers.

A case in point:

- ask an AI model to make some writing suggestions (say, propose a title)

- offer your own proposal

- ask the model for feedback

Almost universally, it will be heavily biased toward praise, not critique.

It's a feel-good activity.

I also want to load all my work (especially my blogs!) into an AI assistant.

- Which one did you use?

- Are you keeping those results private? If so, how?

Thanks.